Recently, I have been working on improving Khan Academy’s user knowledge model to get better predictions of how each student will perform on exercises. We use this model for many things, including assessing a student’s mastery of an exercise and recommending the next piece of content for them to work through. The following is an overview of the model, with a link at the bottom to the full write-up of the work I did to improve and measure it. This write-up was meant for an internal audience, but I thought it might be interesting to others as well. Let me know if you have any questions or ideas for improvements!

————————————————————————————————

Khan Academy models each student’s total knowledge state with a single 100-dimensional vector. This vector is obtained by the artful combination of many other 100-dimensional vectors, depending on how that student has interacted with exercises in the past. Furthermore, we model a student’s interaction with a single exercise with a 6-dimensional vector for every exercise that student has interacted with.

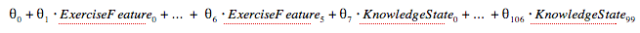

These feature vectors allow us to build the following statistical model to predict a student’s ability to correctly answer the next question in an exercise, even if the “next question” is the very first for that exercise.

To make a prediction, we look up that student’s exercise-specific features and their global knowledge state features, and multiply each one by the corresponding theta. So, our job is to find the 107 theta values that give us the highest likelihood of correctly predicting a student’s success on the next question in an exercise. A different set of theta values is found for each exercise. This allows each exercise to weight aspects of the KnowledgeState differently. The KnowledgeState should only influence predictions for exercises that are highly correlated to the exercises it is composed of.
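As a rough sketch of that prediction step: the code below assumes the 107 thetas are one bias term plus 6 exercise-specific weights plus 100 knowledge state weights, and that the weighted sum is squashed through a logistic (sigmoid) function. Neither assumption is spelled out in this overview, and the function names are made up for illustration.

```python
import math

N_EXERCISE_FEATURES = 6      # per-exercise features (from the post)
N_KNOWLEDGE_FEATURES = 100   # global knowledge state features (from the post)
# 107 thetas in total; treating the extra one as a bias term is an assumption.
N_THETAS = 1 + N_EXERCISE_FEATURES + N_KNOWLEDGE_FEATURES


def sigmoid(z):
    """Numerically stable logistic function (assumed link function)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_correct(thetas, exercise_state, knowledge_state):
    """Estimate the probability that the next answer is correct.

    thetas: the 107 weights found for one exercise.
    exercise_state: 6 features for this student on this exercise.
    knowledge_state: 100 features describing the student globally.
    """
    if len(thetas) != N_THETAS:
        raise ValueError("expected %d thetas" % N_THETAS)
    if len(exercise_state) != N_EXERCISE_FEATURES:
        raise ValueError("expected %d exercise features" % N_EXERCISE_FEATURES)
    if len(knowledge_state) != N_KNOWLEDGE_FEATURES:
        raise ValueError("expected %d knowledge features" % N_KNOWLEDGE_FEATURES)

    # Prepend a constant 1.0 so the first theta acts as the bias.
    features = [1.0] + list(exercise_state) + list(knowledge_state)
    # Multiply each feature by its corresponding theta and add them up.
    z = sum(t * x for t, x in zip(thetas, features))
    return sigmoid(z)
```

Because each exercise has its own thetas, the same student features can produce very different predictions from one exercise to the next.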

If we compute the likelihood that a student will get the next problem correct for all exercises, we can sort the list of exercises by these likelihoods to understand which exercises are more or less difficult for this student and recommend content accordingly. One way we use this list is to offer the student “challenge cards”. Challenge cards allow the student to quickly achieve “mastery” since their history in other exercises shows us that they probably already know this exercise well.
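The ranking idea can be sketched in a few lines. The exercise names, the 0.9 cutoff, and the function names here are all hypothetical. The post does not say what threshold, if any, decides when a challenge card is offered.

```python
def rank_by_likelihood(likelihoods):
    """Sort (exercise, probability) pairs from most to least likely correct."""
    return sorted(likelihoods.items(), key=lambda pair: pair[1], reverse=True)


def challenge_card_candidates(likelihoods, threshold=0.9):
    """Exercises the student probably already knows (threshold is illustrative)."""
    return [name for name, p in rank_by_likelihood(likelihoods) if p >= threshold]


# Made-up predictions for one student.
likelihoods = {"addition_1": 0.97, "absolute_value": 0.55, "addition_2": 0.93}
ranked = rank_by_likelihood(likelihoods)
candidates = challenge_card_candidates(likelihoods)
```

Reading the ranked list from the top gives the exercises this student is most likely to get right. Reading it from the bottom gives the ones that are likely still too hard.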

The 100-dimensional vectors are known as random components. There is one random component vector for each exercise known at the time the theta values are discovered. The vectors are computed deterministically and stored in a database alongside the theta values.
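One way to get vectors that are both random-looking and deterministic is to seed a random number generator with something stable, such as the exercise name. This is only an illustration of the idea: the seeding scheme, the Gaussian distribution, and the function name are my assumptions here, not a description of the production code.

```python
import random


def random_component(exercise_name, dims=100):
    """Return a reproducible pseudo-random vector for one exercise.

    Seeding with the exercise name (an assumed scheme) means the same
    exercise always maps to the same vector, so it can be recomputed
    or stored alongside the thetas.
    """
    rng = random.Random(exercise_name)  # string seeds are deterministic
    return [rng.gauss(0.0, 1.0) for _ in range(dims)]


vec_a = random_component("addition_1")
vec_b = random_component("addition_1")
vec_c = random_component("absolute_value")
```

Here `vec_a` and `vec_b` are identical, while `vec_c` is a different vector of the same length.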

This means that a student’s performance on an exercise that was added to the site after a set of theta values was discovered will not influence any other exercise’s prediction. It cannot be added to the KnowledgeState because the random components for this exercise do not exist. It also means that we cannot predict a student’s success on this new exercise, because theta values for it do not exist. When a student’s predicted success is null, the exercise is said to be “infinitely difficult”.
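To make the consequence concrete, here is a simplified sketch. It assumes the KnowledgeState is a weighted sum of random component vectors, with each weight being some per-exercise performance score. The real combination is more involved than this, and every name below is hypothetical.

```python
def build_knowledge_state(history, components, dims=100):
    """Combine random components into one knowledge state vector.

    history: exercise name -> performance score (assumed representation).
    components: exercise name -> random component vector.
    Exercises with no stored component are skipped, so they cannot
    influence predictions for any other exercise.
    """
    state = [0.0] * dims
    for exercise, score in history.items():
        vec = components.get(exercise)
        if vec is None:
            continue  # exercise was added after the last discovery
        for i, value in enumerate(vec):
            state[i] += score * value
    return state


def predict_or_none(exercise, thetas_by_exercise, features, predict):
    """Return a prediction, or None when no thetas exist for the exercise."""
    thetas = thetas_by_exercise.get(exercise)
    if thetas is None:
        return None  # the "infinitely difficult" case
    return predict(thetas, features)
```

For example, with components stored only for `"a"` and `"b"`, a history entry for a newer exercise changes nothing in the resulting state, and asking for a prediction on that newer exercise returns `None`.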

The thetas we are using today were discovered in early 2013, which means that they do not account for all of the new ways students are using the site (e.g. via the Learning Dashboard).

This project sets out to achieve two goals:

- Upgrade the KnowledgeState mechanism so that it can understand how newly added exercises influence a student’s total knowledge state. Technically, this means computing new random component vectors, and using them during the discovery process.

- Discover new theta values that account for all of the new ways students use the website, along with all of the new exercises that have been added since the thetas were last discovered.

Click here to read the full details on the data collection, verification, performance analysis and conclusions of this project.

You are very close to determining intelligence quotients (IQs) of the pupils. This can be a means of identifying exceptionally high IQs. Those identified provide those with the means to underwrite their education to their maximum potential.


What are some of the exercise features? Things like “has decimals” or “two digit vs. one digit” ?

I would think the predictive ability would depend much on the instruction.

Have you given any thought to how this would affect whether students approach a problem from a “memorize the procedure” to “understand the concept” perspective?


The ExerciseState features are simply a representation of how well the student has done in past attempts on that exercise, as described in David’s blog post. The characteristics you mention should be represented within the global KnowledgeState by way of correlating exercises into groups. For example, if you’ve done well on one digit addition, that will influence the prediction for two digit addition much more than, say, absolute values.

We do think a lot about short-term vs. long-term learning. A tool we use to measure conceptual understanding is an occasional “analytics card” that shows up in a student’s mastery challenge. This card is chosen completely randomly from all of the math content. The student should get more and more of these questions correct as they understand more math. Another tool we use to reinforce conceptual learning is with spaced repetition. We only allow promotion to higher mastery levels after a certain amount of time, and after that we send the student “review” cards over an even longer amount of time to make sure they are continually refreshed on the concept.


When analyzing learning curves, do you run into the problem that stronger students only practice a skill 5-10 times before passing an objective, whereas weaker students practice it many more times (thus making the learning curve appear flatter than it should be)? I came across this article in the 2014 Educational Data Mining Conference Proceedings that might be of interest:

Click to access 52_EDM-2014-Full.pdf
